Hugging Face

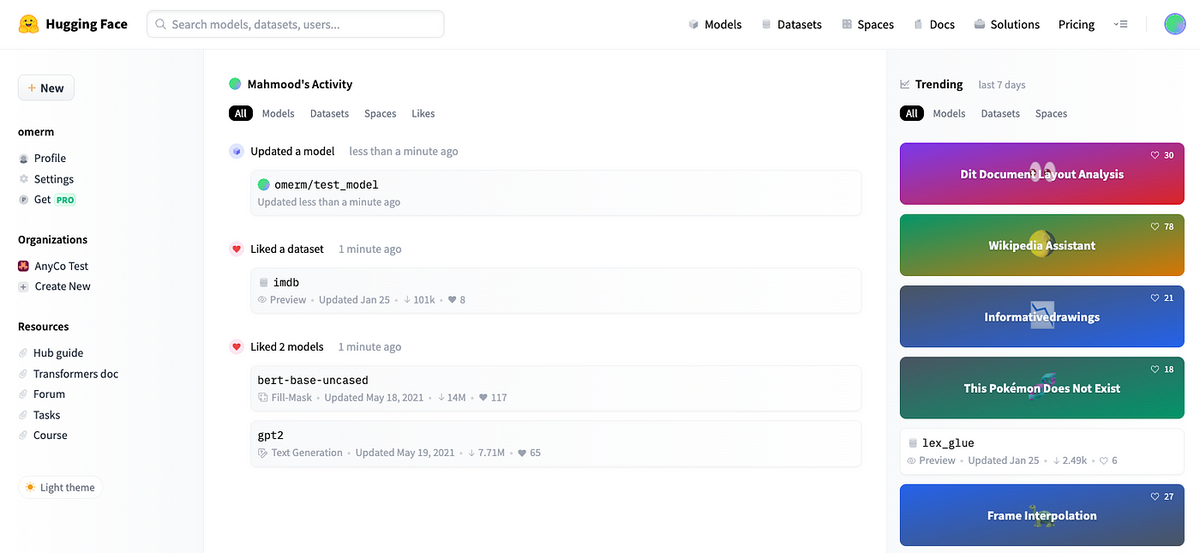

Discover, use, and deploy state-of-the-art machine learning models and datasets.

What is it?

Hugging Face is a platform and community for machine learning developers and researchers. It's best known for its Transformers library, which lets you download and run thousands of pre-trained models from the Hugging Face Hub for natural language processing (NLP), computer vision, audio, and more. You can use Hugging Face models in Python or try many of them directly in your browser.

How to use it?

Visit https://huggingface.co to explore models, datasets, and Spaces. You can search for a task such as 'text summarization' or 'image classification' and try a model online. To use a model in code, install the Transformers library with pip (along with a backend such as PyTorch), load the model, and run it in your Python app.
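The load-and-run steps above can be sketched as follows. This assumes `transformers` and a PyTorch backend are installed (for example with `pip install transformers torch`). The `summarize` helper is hypothetical, and the model ID `sshleifer/distilbart-cnn-12-6` is just one illustrative summarization model from the Hub; the first call downloads its weights.

```python
from typing import Callable, Optional


def summarize(text: str, summarizer: Optional[Callable] = None) -> str:
    """Summarize `text` with a Transformers summarization pipeline.

    Hypothetical helper: if no `summarizer` is passed, one is built with
    transformers.pipeline, which downloads the model on first use.
    """
    if summarizer is None:
        # Imported lazily so this module loads even without transformers installed.
        from transformers import pipeline

        # Illustrative model choice; other summarization models on the Hub also work.
        summarizer = pipeline("summarization", model="sshleifer/distilbart-cnn-12-6")

    # Summarization pipelines return a list of dicts with a "summary_text" key;
    # max_length/min_length are forwarded to the model's text generation.
    result = summarizer(text, max_length=60, min_length=10)
    return result[0]["summary_text"]
```

Passing your own `summarizer` lets you build the pipeline once and reuse it across calls, since loading a model is much slower than running it. It also makes the function easy to test with a stub.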

Why use it?

Hugging Face makes powerful AI tools easy to access. You don’t need to train models from scratch: it provides ready-to-use models for translation, chatbots, sentiment analysis, and more. It’s popular for quick prototyping as well as real-world AI projects.

What can you do with it?

Hugging Face can:

- Run text generation, classification, and translation

- Perform question answering and summarization

- Analyze sentiment or extract keywords

- Work with image, audio, and code models

- Host ML demo apps on Spaces, for example apps built with Gradio

- Fine-tune and share your own models
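As a rough illustration of the sentiment-analysis and Spaces items above, here is a minimal Gradio app of the kind a Space might run. It assumes `gradio`, `transformers`, and `torch` are installed. The helper names `format_prediction` and `build_demo` are hypothetical, and the model ID is an illustrative choice rather than a requirement.

```python
from typing import Callable, Dict, List, Optional


def format_prediction(predictions: List[Dict]) -> str:
    """Turn pipeline output like [{"label": "POSITIVE", "score": 0.98}] into text."""
    top = predictions[0]
    return f"{top['label']} ({top['score']:.2f})"


def build_demo(classifier: Optional[Callable] = None):
    """Build a Gradio Interface around a sentiment-analysis pipeline (hypothetical helper)."""
    import gradio as gr  # requires `pip install gradio`

    if classifier is None:
        from transformers import pipeline

        # Illustrative model ID; any sentiment model on the Hub could be used.
        classifier = pipeline(
            "sentiment-analysis",
            model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
        )

    def classify(text: str) -> str:
        # The pipeline returns a list with one {"label", "score"} dict per input.
        return format_prediction(classifier(text))

    # A simple text-in, text-out web UI.
    return gr.Interface(fn=classify, inputs="text", outputs="text")


if __name__ == "__main__":
    # Starts a local web server; a Gradio Space runs its app file the same way.
    build_demo().launch()
```

On a Gradio Space, a file like this is typically saved as `app.py` next to a `requirements.txt` that lists its dependencies. Keeping `format_prediction` separate from the UI code makes the formatting logic easy to check without launching a server.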

Pros

- Large community and model hub

- Thousands of openly available models (licenses vary)

- Supports NLP, vision, audio, and more

- Great documentation and examples

- Easy web demos via Spaces

Cons

- May require Python and ML knowledge

- Some large models need powerful hardware, such as a GPU with plenty of memory

- API usage can cost money once you exceed the free tier

- Not all models are production-ready

- Security depends on model authors; loading untrusted models, especially pickle-based weight files, can run arbitrary code

Pricing

Hugging Face offers many free tools, and the Transformers library is open source. Hosting apps on Spaces has a free tier, and paid plans start at around $9/month. The Inference API is free up to a usage limit, with usage-based pricing beyond it. Academic and open-source users get extra support.